The Future of Work: Artificial Intelligence and teamwork

The Future of Work and AI

The Future of Work and Artificial Intelligence is discussed under the umbrella “A World Beyond HR”.

Rebecca Lundin, co-founder at Swedish Celpax, talks about Artificial Intelligence from a team perspective.

The Future of Work is still very much about humans… albeit heavily shaped by Artificial Intelligence.

Now, of course, there isn’t a clear view of how artificial intelligence will transform our workplaces.

What we do know is that, as always in history, companies and employees will have to adapt to the drastic changes that lie ahead.

Some changes are already here.

Some will soon come knocking on your company door.

Is your organization future-proofing?

How Artificial Intelligence and People Will Work Together in Teams

The German Think-Tank 2B Ahead is organizing a future of work conference.

At the event, international speakers will spark debates around topics like how Artificial Intelligence and people will work together in teams.

How do we get Artificial Intelligence to become a team member that acts in the best interests of your company?

We also need to future-proof our existing colleagues.

And focus on how to develop a growth mindset.

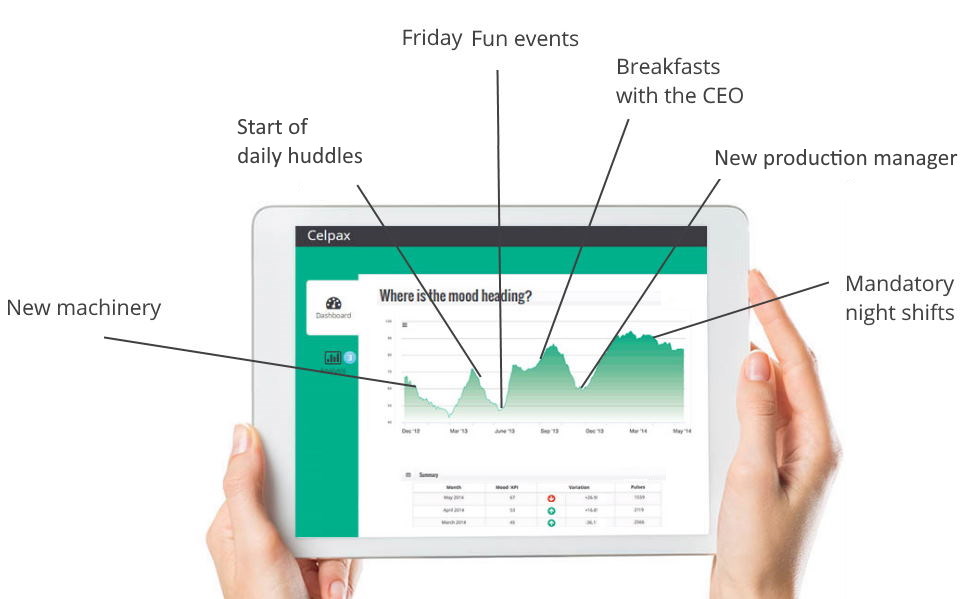

At Celpax, our data shows that continuous improvement and an agile approach are key.


Artificial Intelligence and Decision Making

A burning topic these days is Artificial Intelligence and decision making.

What roles should AI have?

What are its competencies and limits, and where does it best complement us humans?

Which levels of decision-making authority make sense?

What are the benefits of artificial intelligence?

AI dramatically improves our analytic and decision-making abilities. It helps us get the “right” information at the right time.

From a decision-making perspective, we face questions such as whether it should be possible to lose one’s job based on an algorithm’s decision.

Drawing lines is tricky.

And let’s not forget, human decisions aren’t perfect either. Most of what we currently accuse AI of, such as bias, could probably be said of humans too.

How does AI decide in an ethical dilemma?

We need to talk about the areas where AI best complements us humans.

And to determine which tasks currently carried out by humans could be taken over by AI.

Can you imagine AI as a colleague? Or even your boss?

So imagine that tomorrow all the processes are in place and you end up greeting your new digital team colleague called Artificial Intelligence.

What do both sides have to learn from each other, in order to successfully work as a team?

How do we get to know each other best?

Is it the same as having a human co-worker?

Can AI become part of the team if it never comes to lunch and talks about its hobbies?

“Machines are generally poor at understanding a person’s mood”, according to Lynda Gratton, founder of The Future of Work Research Consortium.

How do we get AI to “live” the values of our company?

Is it the same if the AI is your colleague, compared to the AI being your manager?

What are the opportunities and dangers of an automated boss?

And are we, as humans, ready for such a setting on an emotional level?

AI thinks only as far as the data set it was trained on

AI is very efficient for certain things.

But it only thinks as far as the data set it has been trained on.

There is a need for massive training data.

Let’s talk about who trains it.

How do we overcome potential bias in the training data, like the bias that machine learning specialists detected at workplaces such as Amazon?

Or facial recognition systems that recognize white faces with much higher accuracy than black faces:

“If the photo was of a white man, the systems guessed correctly more than 99 per cent of the time. But for black women, the percentage was between 20% and 34%.”

The list of examples like this is a bit too long.

Because we humans aren’t impartial.

So if we train the machines… the result most likely won’t reflect just how diverse our workplaces are.

Machine learning means learning from examples.

What examples do we feed the data set?

This is key.
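One way to make this question concrete is to check how often a system is right for each group separately, instead of looking at a single overall score. Here is a minimal Python sketch of that idea. The function name `accuracy_by_group`, the group labels and the toy records are all made up for illustration; they do not come from any of the studies mentioned here.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: share of correct predictions} for labelled records.

    Each record is a (group, predicted, actual) tuple.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy data, invented for this example:
# (group, what the system predicted, what was actually true)
sample = [
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_a", "match", "match"),
    ("group_a", "match", "match"),
    ("group_b", "match", "no_match"),
    ("group_b", "no_match", "match"),
    ("group_b", "match", "match"),
    ("group_b", "no_match", "match"),
]

rates = accuracy_by_group(sample)
# Gap between the best-served and the worst-served group
gap = max(rates.values()) - min(rates.values())

for group, rate in rates.items():
    print(f"{group}: {rate:.0%} correct")
print(f"Gap between groups: {gap:.0%}")
```

A large gap is a hint that the examples fed to the system did not represent every group equally well. It does not tell you why, but it tells you where to start asking.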

As we teach machines, they will adopt our imperfections. Our beliefs, morals, ideologies, etc.

However, all of this will be adopted without an important filter: the moral compass needed to evaluate it.

It’s hard to train intuition.

How soon before the AI can help us (and itself) to truly detect and improve biases?

What kind of training and what rules would be necessary?

As a colleague, do I have to know how it’s been programmed, or rather its programmer, in order to know what makes it tick?

Transparency

How the AI collects and processes data is an interesting angle.

What level of transparency is currently available in this area?

And if there is transparency, is it presented in such a form that non-programmers can make sense of it?

Or do we need Explainers (hey, AI detectives sounds more intriguing, right?) to interpret for us?

I.e. the right to explanation.

That way, you can more easily determine when to trust the AI to make decisions on its own, without supervision.

And also determine when you need to activate mechanisms to intervene because you see there is a problem.

This will also require training for humans… and an open mind.

Without a growth mindset, many workers will see their job go to colleagues (or new recruits) who have learned how to cooperate with machines.

A study from Accenture showed that “CXOs believe only 26 percent of their workers are prepared to work with AI—which explains why companies are not investing enough in skill-development programs”.

This test confirmed a human tendency to apply greater scrutiny to information when expectations are violated.

MIT’s Moral Machine platform ran a crowdsourced survey to find out how people worldwide thought moral decisions should be made when it comes to self-driving cars.

People were asked to weigh in on the classic ‘trolley problem’.

Participants chose who they thought should be prioritized in an accident. Do you save the pregnant woman in the car, or the group of elderly people crossing the road?

The results showed a huge variation across different cultures.

Again, who is training the AI?

And through what cultural lens?

Where are they based?

Where will the AI act?

PS. Take the test! Freakishly hard to determine.

Here in Sweden, for example, cars are obliged to stop at any zebra crossing if a pedestrian is about to cross, regardless of whether there is a traffic light. Would that add to the cultural differences? The Moral Machine’s goal is to help us reflect on important and difficult decisions.

Respecting Human Workers’ Integrity

There is also the question of transparency the other way around.

With listening capabilities, what conversations might the AI pick up on in the workplace?

How will companies use that information and make sure human workers’ integrity and sensitive information are kept safe?

Add to this that we humans seem to want to maintain the illusion that AI truly cares about us.

Is our workplace law keeping up with our rapid technological advancement?

What regulations need to be revised for businesses?

What’s the background of those revising this law?

AI Onboarding

Do you remember your first day at work?

Our future AI teammates could be onboarded using a similar pattern to how trust is currently built between colleagues and managers.

Show them the ropes!

Start by trusting them with small or routine tasks that are easy.

Tasks where the stakes are low.

Once their work has been verified and trust has been established, they can dive into real work, with complexity and importance added as you go along.

AI as a coach - Not an automated boss

Let’s think of AI as a coach, which would be a more ethical approach.

Using machine learning and normalized workforce data, it can help you find out if you’re a good leader, colleague, etc., and determine the areas where you need to improve.

This way the AI can throw hard facts at you so you can find out about your own bias.

No one is perfect

Perhaps the person that you are about to promote to team leader is the one who generates the least revenue.

And is the least popular among the team members.

Or maybe there are things that you aren’t doing, that other leaders are.

Or activities that you’re not doing as effectively as others.

A lot of employees will still want to talk to a ‘human’ when it comes to sensitive issues at work.

To sum up:

Thomas Koulopoulos says:

“The challenge of artificial intelligence isn’t so much the technology as it is our own attitude about machines and intelligence.”

We need to talk more about what changes are needed to make sure humans and AI can work together as a team, in the most effective way.

Leaders need to figure out their roles in prepping their people for the future of work.

And have some fun while they’re at it.

Measure if your leadership actions are working!

Hej! I’m Rebecca, co-owner at Celpax. We use simple tech tools to measure and create a great work environment. And build a better society while at it! Interested in the Future of Work and Artificial Intelligence? Let’s chat on Twitter.

